How Data Deduplication Works

Data deduplication is a technology that allows you to fit more backups on the same physical media, retain backups for longer periods of time, and speed up data recovery. Deduplication analyzes the data streams sent for backup, looking for duplicate "chunks," and saves only the unique chunks to disk. Duplicates are tracked in special index files.

In Arcserve Backup, deduplication is an in-line process that occurs at the backup server, within a single session. To identify redundancy between the backup jobs performed on the root directories of two different computers, use global deduplication.

During the first backup:

- Arcserve Backup scans incoming data and segments it into chunks. This process occurs in the SIS layer of the Tape Engine.

- Arcserve Backup executes a hashing algorithm that assigns a unique value to each chunk of data and saves those values to a hash file.

- Arcserve Backup compares hash values. When duplicates are found, data is written to disk only once, and a reference is added to a reference file pointing back to the storage location of the first identified instance of that data chunk.

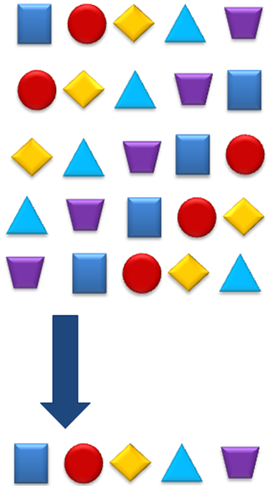

In the diagram above, the disk space needed to back up this data stream is smaller in a deduplication backup job than in a regular backup job.
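The first-backup steps above can be sketched in a few lines of Python. This is a minimal illustration, not Arcserve Backup's implementation: fixed-size chunking, the 4-byte `CHUNK_SIZE`, SHA-256 as the hashing algorithm, and the helper names `split_into_chunks` and `dedup_backup` are all assumptions made for the example. The in-memory `data_file`, `hash_index`, and `references` objects stand in for the data, hash, and reference files.

```python
import hashlib

# Hypothetical fixed chunk size in bytes, kept tiny so the example is easy to follow.
# The chunking method and chunk size Arcserve Backup uses are not described here.
CHUNK_SIZE = 4


def split_into_chunks(stream: bytes, size: int = CHUNK_SIZE):
    """Segment the incoming data stream into fixed-size chunks (assumed scheme)."""
    for start in range(0, len(stream), size):
        yield stream[start:start + size]


def dedup_backup(stream: bytes):
    """Deduplicate one backup session.

    Returns (data_file, hash_index, references):
      data_file: bytes of each unique chunk, written once
      hash_index: hash value -> offset of the first instance in data_file
      references: one (offset, length) entry per chunk of the original stream
    """
    data_file = bytearray()
    hash_index = {}
    references = []
    for chunk in split_into_chunks(stream):
        digest = hashlib.sha256(chunk).hexdigest()  # SHA-256 stands in for the real hash
        if digest not in hash_index:
            # First occurrence: write the chunk to "disk" exactly once.
            hash_index[digest] = len(data_file)
            data_file.extend(chunk)
        # Duplicate or not, record where this chunk's single stored copy lives.
        references.append((hash_index[digest], len(chunk)))
    return bytes(data_file), hash_index, references


stream = b"ABCDABCDABCDWXYZ"
data_file, hash_index, references = dedup_backup(stream)
print(len(stream), len(data_file))  # prints: 16 8
```

The 16-byte stream contains the chunk `ABCD` three times, so only 8 bytes of unique data are stored, while every original chunk still has a reference pointing at its single stored copy.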

With deduplication, three files are created for every backup session:

- Index files (metadata files):

  - Hash files: store the markers assigned to each redundant chunk of data.

  - Reference files: count hashes and store, for each hash, the corresponding address in the data files.

- Data files: store the unique instances of the data you backed up.
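One way to picture the three per-session files is as simple in-memory structures. The text above does not spell out their on-disk format, so the `Session` class, its field names, the choice to record a hash for every chunk in `hash_file`, and the use of SHA-256 are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Session:
    """Hypothetical in-memory model of the three files created per backup session."""
    # Hash file: the hash of each chunk, in stream order.
    hash_file: list = field(default_factory=list)
    # Reference file: hash -> [count, address of the chunk in the data file].
    reference_file: dict = field(default_factory=dict)
    # Data file: the bytes of each unique chunk.
    data_file: bytearray = field(default_factory=bytearray)

    def add_chunk(self, chunk: bytes) -> None:
        digest = hashlib.sha256(chunk).hexdigest()
        self.hash_file.append(digest)
        entry = self.reference_file.get(digest)
        if entry is None:
            # New chunk: store it once and remember where it lives.
            self.reference_file[digest] = [1, len(self.data_file)]
            self.data_file.extend(chunk)
        else:
            # Duplicate chunk: nothing is written, only the count goes up.
            entry[0] += 1


session = Session()
for chunk in (b"AAAA", b"BBBB", b"AAAA"):
    session.add_chunk(chunk)
print(len(session.hash_file), len(session.reference_file), bytes(session.data_file))
# prints: 3 2 b'AAAABBBB'
```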

Together, the two index files consume a small percentage of the total data store, so the speed of the drive that stores them matters more than its size. Consider a solid-state disk (SSD) or a similar device with excellent seek times for this purpose.
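A rough estimate shows why the index files stay small relative to the data files: if each chunk adds roughly one hash entry and one reference entry, the index grows by those entry sizes per chunk while the data grows by the chunk size. The helper `index_overhead` and the sizes below are hypothetical, chosen only to illustrate the ratio; they are not Arcserve Backup values.

```python
def index_overhead(avg_chunk_bytes: int, hash_entry_bytes: int, ref_entry_bytes: int) -> float:
    """Fraction of the data store taken by the index, assuming one hash entry
    and one reference entry per chunk (a simplifying assumption)."""
    return (hash_entry_bytes + ref_entry_bytes) / avg_chunk_bytes


# Hypothetical sizes: 8 KiB average chunks, 20-byte hash entries, 16-byte reference entries.
overhead = index_overhead(8 * 1024, 20, 16)
print(f"{overhead:.2%}")  # prints: 0.44%
```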

During subsequent backups:

- Arcserve Backup scans incoming data and breaks it into chunks.

- Arcserve Backup executes the hashing algorithm to assign hash values.

- Arcserve Backup compares new hash values to previous values, looking for duplicates. When duplicates are found, data is not written to disk. Instead, the reference file is updated with the storage location of the original instance of the data chunk.
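The subsequent-backup steps above differ from the first backup only in that the hash index already holds values from earlier runs. The sketch below assumes fixed-size chunks, SHA-256, and a hypothetical `backup` helper that shares one in-memory index and data file across runs; it shows that the second run writes only the chunk it has not seen before.

```python
import hashlib

CHUNK_SIZE = 4  # hypothetical fixed chunk size, tiny for illustration


def backup(stream: bytes, hash_index: dict, data_file: bytearray) -> list:
    """Back up one run against an index carried over from earlier runs.

    Returns the run's references as (offset, length) pairs. Only chunks whose
    hash is not already in hash_index are appended to data_file.
    """
    references = []
    for start in range(0, len(stream), CHUNK_SIZE):
        chunk = stream[start:start + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in hash_index:
            # Unseen chunk: write it and index its location.
            hash_index[digest] = len(data_file)
            data_file.extend(chunk)
        # Known chunk: nothing is written, only a reference is recorded.
        references.append((hash_index[digest], len(chunk)))
    return references


hash_index, data_file = {}, bytearray()
first = backup(b"AAAABBBBCCCC", hash_index, data_file)   # all three chunks are new
size_after_first = len(data_file)
second = backup(b"AAAABBBBDDDD", hash_index, data_file)  # only DDDD is new
print(size_after_first, len(data_file))  # prints: 12 16
```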

Note: Enable Optimization for better throughput and lower CPU usage. With Optimization enabled, Arcserve Backup scans file attributes, looking for changes at the file header level. If a file has not changed, the hashing algorithm is not executed on it and it is not copied to disk; the hashing algorithm runs only on files changed since the last backup. To enable Optimization, select the Allow optimization in Deduplication Backups option on the Deduplication Group Configuration screen. Optimization is supported on Windows volumes only. It is not supported for stream-based backups, such as SQL VDI, Exchange DB-level, Oracle, and VMware image-level backups.
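The Optimization check can be sketched as a filter that runs before chunking and hashing. Which file attributes Arcserve Backup compares is not specified above, so the (size, last-modified time) tuple and the `files_to_hash` helper are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical attribute tuple: (size in bytes, last-modified timestamp).
Attributes = Tuple[int, float]


def files_to_hash(current: Dict[str, Attributes],
                  previous: Dict[str, Attributes]) -> List[str]:
    """Return files that are new or whose attributes changed since the last backup.

    Only these files would go through chunking and hashing; the rest are skipped.
    """
    return sorted(path for path, attrs in current.items()
                  if previous.get(path) != attrs)


previous = {"data/a.txt": (100, 1700000000.0),
            "data/b.txt": (250, 1700000000.0)}
current = {"data/a.txt": (100, 1700000000.0),   # unchanged: skipped
           "data/b.txt": (300, 1700000500.0),   # changed: hashed
           "data/c.txt": (50, 1700000600.0)}    # new: hashed
print(files_to_hash(current, previous))  # prints: ['data/b.txt', 'data/c.txt']
```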

When you restore deduplicated data, Arcserve Backup refers to the index files to first identify and then locate each chunk of data needed to reassemble the original data stream.
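Reassembly during a restore amounts to following each reference, in order, back to the stored copy of its chunk. This sketch assumes a hypothetical layout in which each reference is an (offset, length) pair into a single data file; the real index file format is not described here, and `restore` is an illustrative name.

```python
def restore(data_file: bytes, references: list) -> bytes:
    """Rebuild the original stream by following each (offset, length) reference
    into the data file, in the order the chunks originally appeared."""
    return b"".join(data_file[offset:offset + length] for offset, length in references)


data_file = b"ABCDWXYZ"                         # unique chunks only
references = [(0, 4), (0, 4), (0, 4), (4, 4)]  # one entry per original chunk
print(restore(data_file, references))  # prints: b'ABCDABCDABCDWXYZ'
```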